Research



Operation of Mobile Robot in Snowy Environment [June 2021 ~ Present]

Research Background


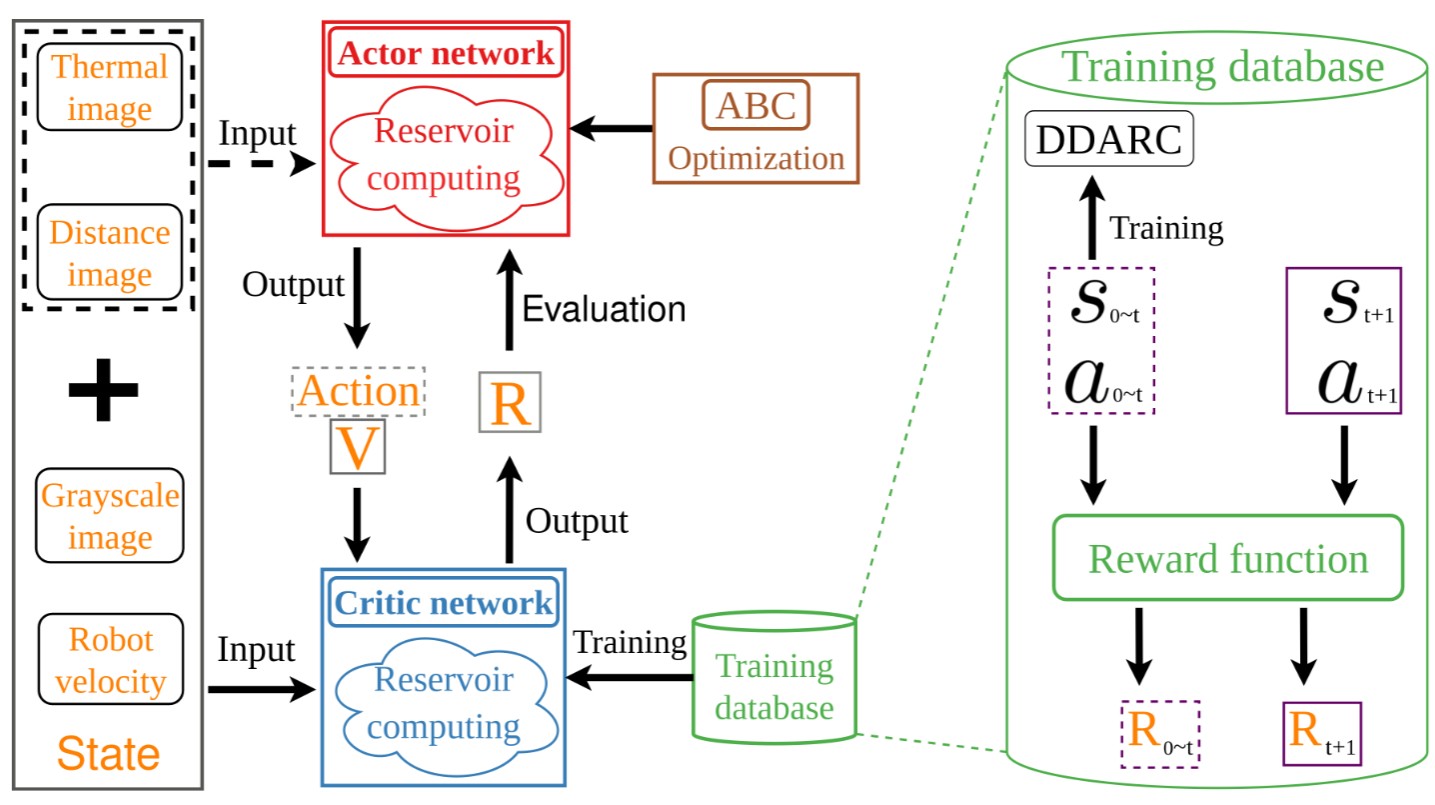

Self-adaptive Autonomous Navigation System for Outdoor Robot in Snowy Environments

To reduce the burden on elderly residents of heavy-snowfall areas, it is important to support snow removal work with robots. This study develops an autonomous navigation system for snowy environments. To overcome the limitations of conventional learning-based methods, which require extensive retraining, the system employs reservoir computing (RC) combined with reinforcement learning (RL) and heuristic optimization, enabling the robot to learn and adapt continuously in real-world environments, as shown in Fig. 1. A k-means-based segmentation method is introduced to detect snow-covered regions and to guide the robot toward areas that require snow removal. The framework integrates snow-region segmentation, obstacle avoidance, and motion control into a unified navigation pipeline. Experiments conducted in both simulated and real snowy environments demonstrate robust and reliable navigation performance.

Fig. 1 System overview of autonomous navigation based on RC combined with RL in snowy environments.

Mov. 1 Self-adaptive autonomous navigation in snowy environments.
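The k-means-based snow segmentation mentioned above can be illustrated with a minimal sketch. It is not the authors' implementation: the page does not state which features or how many clusters are used, so this example assumes grayscale intensity as the only feature, two clusters, and that the brightest cluster corresponds to snow. The function names `kmeans_1d` and `snow_mask` are hypothetical.

```python
from typing import List


def kmeans_1d(values: List[float], k: int = 2, iters: int = 20) -> List[float]:
    """Lloyd's k-means on scalar values (k >= 2); returns sorted cluster centers."""
    lo, hi = min(values), max(values)
    # Deterministic initialization: spread the centers evenly between min and max.
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iters):
        # Assignment step: put each value in the bucket of its nearest center.
        buckets: List[List[float]] = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centers[c]))
            buckets[nearest].append(v)
        # Update step: move each center to the mean of its bucket (keep it if empty).
        new_centers = [
            sum(b) / len(b) if b else centers[i] for i, b in enumerate(buckets)
        ]
        if new_centers == centers:
            break  # converged
        centers = new_centers
    return sorted(centers)


def snow_mask(gray: List[List[float]]) -> List[List[bool]]:
    """Mark pixels nearer to the brightest center as snow.

    Assumption (not from the source): snow is the brightest region in the image.
    """
    flat = [p for row in gray for p in row]
    centers = kmeans_1d(flat, k=2)
    dark, bright = centers[0], centers[-1]
    return [[abs(p - bright) < abs(p - dark) for p in row] for row in gray]


if __name__ == "__main__":
    image = [
        [10, 12, 200],
        [11, 210, 220],
        [9, 205, 215],
    ]
    for row in snow_mask(image):
        print("".join("S" if is_snow else "." for is_snow in row))
```

In practice, intensity alone would confuse snow with other bright surfaces such as sky or concrete, so a real system would likely cluster on richer features such as color or texture.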


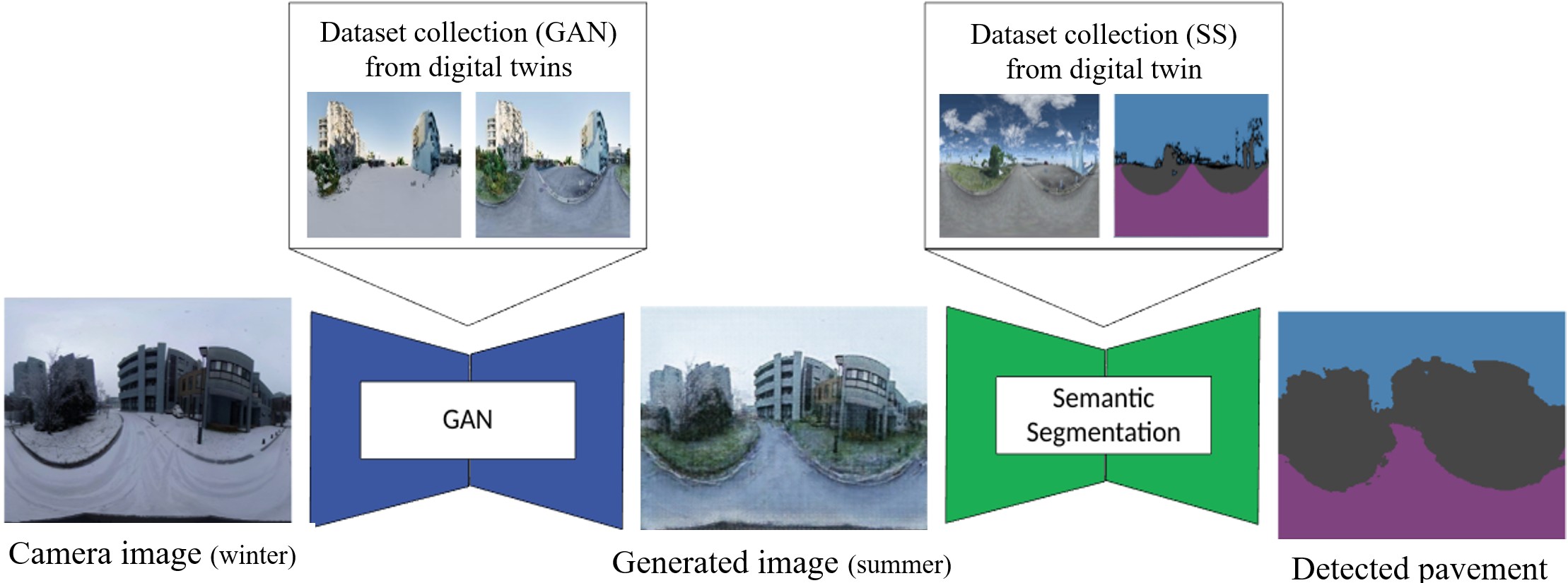

GAN-Based Pavement Road Detection in Snowy Environments Using Digital Twins for Sim2Real

In snowy environments, a mobile robot cannot reliably follow snow-covered roads because the pavement is difficult to detect in camera images. To overcome this limitation, we propose an approach that detects the traversable area in snowy environments using generative adversarial networks (GANs). In our approach, winter images captured by a camera are transformed into summer images by the GAN, and the area of the snow-covered pavement is then detected automatically by applying semantic segmentation. However, collecting large datasets for the GAN and for semantic segmentation is a tedious task that requires enormous effort and time. To address this issue, we propose a novel Sim2Real framework that automatically collects realistic image datasets from digital twins used as virtual environments, as shown in Fig. 2. To achieve Sim2Real, it is essential to bridge the domain gap between the virtual and real-world environments. Hence, we perform domain transformation to build realistic 3D models of the environment in both summer and winter conditions as digital twins, as shown in Movs. 2 and 3. The experimental results show that a GAN model trained in the digital twins accurately converts winter images to snow-free summer images, improving the detection of snow-covered pavement. In addition, the central point of the detected road area is extracted as a sub-goal for the motion control of the mobile robot; thus, the mobile robot is able to follow the snow-covered road stably, as shown in Mov. 4.

Fig. 2 Overview of the proposed framework for traversable area detection based on Sim2Real using digital twins.

Mov. 2 3D digital twin construction in summer situation.

Mov. 3 Domain transformation to 3D winter model.

Mov. 4 Motion control of mobile robot on detected road area in snowy environment.
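The sub-goal extraction described above, which takes the central point of the detected road area, can be sketched as follows. This is an illustration under assumptions, not the authors' controller: the road area is assumed to be given as a binary mask, the central point is taken to be the pixel centroid, and the normalized steering signal is a hypothetical addition. The names `road_centroid` and `steering_offset` are also hypothetical.

```python
from typing import List, Optional, Tuple


def road_centroid(mask: List[List[bool]]) -> Optional[Tuple[float, float]]:
    """Return the (row, col) centroid of road pixels, or None if no road is detected."""
    total = row_sum = col_sum = 0
    for r, row in enumerate(mask):
        for c, is_road in enumerate(row):
            if is_road:
                total += 1
                row_sum += r
                col_sum += c
    if total == 0:
        return None
    return row_sum / total, col_sum / total


def steering_offset(mask: List[List[bool]]) -> float:
    """Hypothetical steering signal in [-1, 1].

    Negative values mean the sub-goal lies left of the image center,
    positive values mean it lies to the right, and 0.0 means centered
    (or that no road was detected).
    """
    centroid = road_centroid(mask)
    if centroid is None:
        return 0.0
    center_col = (len(mask[0]) - 1) / 2
    if center_col == 0:
        return 0.0  # single-column image: no horizontal offset is defined
    return (centroid[1] - center_col) / center_col


if __name__ == "__main__":
    # Road occupies the right half of a 2x4 image.
    mask = [
        [False, False, True, True],
        [False, False, True, True],
    ]
    print(road_centroid(mask))
    print(steering_offset(mask))
```

A signal of this kind could feed a simple proportional controller on angular velocity, although the page does not specify how the sub-goal is converted into motion commands.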

Related Papers


• Yugo Takagi, Fangzheng Li, Reo Miura, and Yonghoon Ji, "Motion Control of Mobile Robot Using Semantic Segmentation in Snowy Environment – Temporally Consistent Image-to-Image Translation from Winter to Summer Based on GAN –," International Journal of Automation Technology, Vol. 19, No. 4, pp. 575-586, July 2025.
• Reo Miura and Yonghoon Ji, "Traversable Area Detection in Omnidirectional Camera Images Using a 3D Model Built by Structure from Motion," Proceedings of the Vision Engineering Workshop 2024 (ViEW2024), Yokohama, Japan, December 2024 (in Japanese).
• Fangzheng Li and Yonghoon Ji, "Dual-Type Discriminator Adversarial Reservoir Computing for Robust Autonomous Navigation in a Snowy Environment," Proceedings of the 2024 21st International Conference on Ubiquitous Robots (UR2024), New York, USA, June 2024.
• Jiaheng Lu and Yonghoon Ji, "Autonomous Navigation with Route Opening Capability Based on Deep Reinforcement Learning by Material Recognition," Proceedings of the 2024 21st International Conference on Ubiquitous Robots (UR2024), New York, USA, June 2024.